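For the first two images, the relative pose (R and T) is computed from the essential matrix, and the 3D coordinates are then obtained by triangulation. The underlying theory is easy to look up (for example on Baidu).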
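As a brief reminder of what these two steps compute, here is the usual pinhole formulation. It assumes the convention that a point $X_1$ in the first camera frame maps to $X_2 = R X_1 + T$ in the second, which is the convention OpenCV's `recoverPose` follows; $p_i$ are homogeneous pixel coordinates and $\hat{x}_i$ their normalized counterparts (symbols introduced here for illustration).

$$
\hat{x}_i = K^{-1} p_i, \qquad \hat{x}_2^{\top} E \, \hat{x}_1 = 0, \qquad E = [T]_{\times} R
$$

$$
P_1 = K\,[\,I \mid 0\,], \qquad P_2 = K\,[\,R \mid T\,], \qquad s_i \, p_i = P_i \begin{bmatrix} X \\ 1 \end{bmatrix}, \quad i = 1, 2
$$

The first line is the epipolar constraint from which $E$ is estimated and then decomposed into $R$ and $T$ (with $T$ known only up to scale). The second line is what triangulation solves for the 3D point $X$, given the two projection matrices.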

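Later images are added incrementally with PnP. Take the intersection of the image 2 / image 3 matches with the image 1 / image 2 matches: since images 1 and 2 have already been reconstructed, these shared points already have point-cloud coordinates, so R and T can be solved directly with PnP.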
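The "shared points" lookup can be sketched without OpenCV. In this minimal illustration, `Match` is a hypothetical stand-in for `cv::DMatch`, and `CollectPnPCorrespondences` is a name made up for this example; the per-keypoint index array plays the role of `Correspond_struct_idx`, with -1 meaning "no 3D point yet".

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices of one matched keypoint pair.
struct Match {
    int queryIdx;  // keypoint index in the already-reconstructed image
    int trainIdx;  // keypoint index in the new image
};

// For every match whose query keypoint already has a point-cloud index (>= 0),
// emit a (3D point index, new-image keypoint index) pair. These pairs are the
// 3D-2D correspondences a PnP solver needs.
std::vector<std::pair<int, int>> CollectPnPCorrespondences(
    const std::vector<Match>& matches,
    const std::vector<int>& structIdxOfQuery)
{
    std::vector<std::pair<int, int>> out;
    for (const Match& m : matches) {
        assert(m.queryIdx >= 0 &&
               m.queryIdx < static_cast<int>(structIdxOfQuery.size()));
        const int structIdx = structIdxOfQuery[m.queryIdx];
        if (structIdx < 0) continue;  // this keypoint has not been reconstructed yet
        out.emplace_back(structIdx, m.trainIdx);
    }
    return out;
}
```

Note that index 0 is a valid point-cloud index, so the test has to be `>= 0` rather than `> 0`.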

    #pragma once
    #include <opencv2/xfeatures2d/nonfree.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <fstream>
    #include <iostream>
    #include <vector>

    using namespace std;
    using namespace cv;

    // Per-image record: pixels, intrinsics, features and estimated pose.
    class ImageBag
    {
    public:
        ImageBag(Mat, Mat);
        ~ImageBag();
        void SetKeyPoints(vector<KeyPoint>, Mat);
        void SetStructIdx(vector<int>);

        // Public data
        Mat Image;
        Mat K;
        vector<KeyPoint> Key_points;
        Mat Descriptor;
        Mat R;
        Mat T;
        // For each keypoint, the index of its 3D point in the point cloud (-1 if none)
        vector<int> Correspond_struct_idx;
    };

    ImageBag::ImageBag(Mat image, Mat k)
    {
        Image = image;
        K = k;
    }

    ImageBag::~ImageBag()
    {
        if (!Image.empty()) { Image.release(); }
        if (!K.empty()) { K.release(); }
    }

    void ImageBag::SetStructIdx(vector<int> index)
    {
        Correspond_struct_idx = index;
    }

    void ImageBag::SetKeyPoints(vector<KeyPoint> keyPoints, Mat descriptor)
    {
        Key_points = keyPoints;
        Descriptor = descriptor;
        // Initialise Correspond_struct_idx from keyPoints: no keypoint has a 3D point yet
        Correspond_struct_idx.assign(keyPoints.size(), -1);
    }

The above is the entity class for a single image. It holds the per-image information, which keeps the code that follows easier to read.

    #pragma once
    #include <opencv2/xfeatures2d/nonfree.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <algorithm>
    #include <cfloat>
    #include <fstream>
    #include <iostream>
    #include "ImageBag.h"

    using namespace cv;
    using namespace std;

    // Collection of static helpers ("static class" is not valid C++, so a plain class is used)
    class _3DBuilder
    {
    public:
        _3DBuilder();
        ~_3DBuilder();
        static void ExtractFeatures(vector<ImageBag>&);
        static void MatchFeatures(Mat, Mat, vector<DMatch>&);
        static void GetMatchedPoints(vector<KeyPoint>, vector<KeyPoint>, vector<DMatch>, vector<Point2f>&, vector<Point2f>&);
        static bool FindTransform(Mat, vector<Point2f>, vector<Point2f>, Mat&, Mat&, Mat&);
        static void MaskoutPoints(vector<Point2f>&, Mat&);
        static void Reconstruct(Mat&, Mat&, Mat&, Mat&, Mat&, vector<Point2f>&, vector<Point2f>&, vector<Point3f>&);
    };

    _3DBuilder::_3DBuilder() {}
    _3DBuilder::~_3DBuilder() {}

    #pragma region Methods
    // Detect keypoints and compute descriptors for every image, and store them
    void _3DBuilder::ExtractFeatures(vector<ImageBag>& imageBagVector)
    {
        // cv::SIFT is part of features2d from OpenCV 4.4 on; older builds expose it as cv::xfeatures2d::SIFT
        Ptr<SIFT> detector = SIFT::create();
        for (size_t i = 0; i < imageBagVector.size(); i++)
        {
            Mat image = imageBagVector[i].Image;
            if (image.empty()) { continue; }

            vector<KeyPoint> key_points;
            Mat descriptor;
            // Occasionally fails with a memory allocation error
            detector->detectAndCompute(image, noArray(), key_points, descriptor);

            // Too few keypoints: skip this image
            if (key_points.size() <= 10) { continue; }
            imageBagVector[i].SetKeyPoints(key_points, descriptor);
        }
    }

    void _3DBuilder::MatchFeatures(Mat query, Mat train, vector<DMatch>& matches)
    {
        matches.clear();
        // Images skipped by ExtractFeatures have no descriptors
        if (query.empty() || train.empty()) { return; }

        vector<vector<DMatch>> knn_matches;
        BFMatcher matcher(NORM_L2);
        matcher.knnMatch(query, train, knn_matches, 2);

        // Find the smallest distance among the matches that pass the ratio test
        const float K = 0.6f;
        float min_dist = FLT_MAX;
        for (size_t r = 0; r < knn_matches.size(); ++r)
        {
            if (knn_matches[r].size() < 2) continue;
            // Ratio test
            if (knn_matches[r][0].distance > K * knn_matches[r][1].distance) continue;
            min_dist = min(min_dist, knn_matches[r][0].distance);
        }

        for (size_t r = 0; r < knn_matches.size(); ++r)
        {
            if (knn_matches[r].size() < 2) continue;
            // Reject matches that fail the ratio test or whose distance is too large
            if (knn_matches[r][0].distance > K * knn_matches[r][1].distance ||
                knn_matches[r][0].distance > 5 * max(min_dist, 10.0f))
                continue;
            // Keep the match
            matches.push_back(knn_matches[r][0]);
        }
    }

    // Collect the pixel coordinates of the matched keypoints
    void _3DBuilder::GetMatchedPoints(vector<KeyPoint> p1, vector<KeyPoint> p2, vector<DMatch> matches,
                                      vector<Point2f>& out_p1, vector<Point2f>& out_p2)
    {
        out_p1.clear();
        out_p2.clear();
        for (size_t i = 0; i < matches.size(); ++i)
        {
            out_p1.push_back(p1[matches[i].queryIdx].pt);
            out_p2.push_back(p2[matches[i].trainIdx].pt);
        }
    }

    // Estimate R and T
    bool _3DBuilder::FindTransform(Mat K, vector<Point2f> p1, vector<Point2f> p2, Mat& R, Mat& T, Mat& mask)
    {
        // Focal length and principal point from the intrinsic matrix
        double focal_length = 0.5 * (K.at<double>(0, 0) + K.at<double>(1, 1));
        Point2d principle_point(K.at<double>(0, 2), K.at<double>(1, 2));

        // Estimate the essential matrix with RANSAC, which also rejects further mismatches
        Mat E = findEssentialMat(p1, p2, focal_length, principle_point, RANSAC, 0.999, 1.0, mask);
        if (E.empty()) return false;

        double feasible_count = countNonZero(mask);
        cout << (int)feasible_count << " -in- " << p1.size() << endl;
        // RANSAC is unreliable when outliers exceed about 50%; at least 60% inliers are required here
        if (feasible_count <= 15 || (feasible_count / p1.size()) < 0.6) return false;

        // Decompose the essential matrix to get the relative transform
        int pass_count = recoverPose(E, p1, p2, R, T, focal_length, principle_point, mask);
        // Enough points must lie in front of both cameras
        if (((double)pass_count) / feasible_count < 0.7) return false;
        return true;
    }

    // Keep only the points whose mask entry is non-zero
    void _3DBuilder::MaskoutPoints(vector<Point2f>& p1, Mat& mask)
    {
        vector<Point2f> p1_copy = p1;
        p1.clear();
        for (int i = 0; i < mask.rows; ++i)
        {
            if (mask.at<uchar>(i) > 0) p1.push_back(p1_copy[i]);
        }
    }

    void _3DBuilder::Reconstruct(Mat& K, Mat& R0, Mat& T0, Mat& R, Mat& T,
                                 vector<Point2f>& p1, vector<Point2f>& p2, vector<Point3f>& xyz)
    {
        // Projection matrices [R T] of the two cameras; triangulatePoints only supports float
        Mat proj1(3, 4, CV_32FC1);
        Mat proj2(3, 4, CV_32FC1);
        R0.convertTo(proj1(Range(0, 3), Range(0, 3)), CV_32FC1);
        T0.convertTo(proj1.col(3), CV_32FC1);
        R.convertTo(proj2(Range(0, 3), Range(0, 3)), CV_32FC1);
        T.convertTo(proj2.col(3), CV_32FC1);

        Mat fK;
        K.convertTo(fK, CV_32FC1);
        proj1 = fK * proj1;
        proj2 = fK * proj2;

        // Triangulate
        Mat s;
        triangulatePoints(proj1, proj2, p1, p2, s);

        xyz.clear();
        xyz.reserve(s.cols);
        for (int i = 0; i < s.cols; ++i)
        {
            Mat_<float> col = s.col(i);
            col /= col(3);  // homogeneous coordinates: divide by the last element to get the real values
            xyz.push_back(Point3f(col(0), col(1), col(2)));
        }
    }

    // Build the 3D-2D correspondences for PnP from matches whose query keypoint already has a 3D point
    void get_objpoints_and_imgpoints(vector<DMatch>& matches, vector<int>& struct_indices,
                                     vector<Point3f>& structure, vector<KeyPoint>& key_points,
                                     vector<Point3f>& object_points, vector<Point2f>& image_points)
    {
        object_points.clear();
        image_points.clear();
        for (size_t i = 0; i < matches.size(); ++i)
        {
            int query_idx = matches[i].queryIdx;
            int train_idx = matches[i].trainIdx;
            int struct_idx = struct_indices[query_idx];
            if (struct_idx < 0) continue;
            object_points.push_back(structure[struct_idx]);
            image_points.push_back(key_points[train_idx].pt);
        }
    }
    #pragma endregion

The above is the class that holds the methods.
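The ratio test inside `MatchFeatures` is easier to see in isolation. The sketch below is plain C++ with no OpenCV dependency; `TwoNearest`, `PassesRatioTest` and `KeepUnambiguous` are names invented for this illustration, and the default threshold of 0.6 is the value the listing uses. It leaves out the second filter in `MatchFeatures` (rejecting distances larger than 5 times the minimum).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Distances from one query descriptor to its best and second-best candidates.
struct TwoNearest {
    float best;
    float second;
};

// Ratio test: a match is kept only if the best candidate is clearly closer
// than the runner-up, i.e. best <= ratio * second.
bool PassesRatioTest(const TwoNearest& d, float ratio = 0.6f)
{
    assert(d.best >= 0.0f && d.best <= d.second);
    return d.best <= ratio * d.second;
}

// Return the indices of the candidates that survive the ratio test.
std::vector<std::size_t> KeepUnambiguous(const std::vector<TwoNearest>& candidates,
                                         float ratio = 0.6f)
{
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        if (PassesRatioTest(candidates[i], ratio)) kept.push_back(i);
    }
    return kept;
}
```

A lower ratio keeps fewer but more distinctive matches, which matters here because wrong matches feed directly into the RANSAC stages.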

    #include <opencv2/xfeatures2d/nonfree.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <sys/stat.h>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include "ImageBag.h"
    #include "3DBuilder.h"

    using namespace cv;
    using namespace std;

    int main()
    {
        vector<Point3f> All_structure;
        vector<Point3f> All_structureRGB;
        // The path separators in the two paths below were lost when the post was published; substitute your own paths
        ofstream outfile("E:a学习资源与代码三维重建SFM3DReconstructCPP - 多视角x64DebugXYZ.txt", ios::trunc);
        string imagePath = "E:a学习资源与代码三维重建SFM3DReconstructCPP - 多视角x64DebugImage";

        // Intrinsic matrix
        /*Mat K(Matx33d(
            2759.48, 0, 1520.69,
            0, 2764.16, 1006.81,
            0, 0, 1));*/
        Mat K(Matx33d(
            1520.4, 0, 302.32,
            0, 1525.9, 246.87,
            0, 0, 1));

        // Load images 0.png, 1.png, ... until a file is missing
        vector<ImageBag> ImageBags;
        int imageCount = 0;
        while (true)
        {
            string imageFile = imagePath + to_string(imageCount) + ".png";
            struct stat buffer;
            // Stop when the image does not exist
            if (stat(imageFile.c_str(), &buffer) != 0) { break; }
            Mat image = imread(imageFile);
            ImageBags.push_back(ImageBag(image, K));
            imageCount++;
        }

        // Extract features
        _3DBuilder::ExtractFeatures(ImageBags);

        // Compare the images pairwise
        for (size_t i = 0; i < ImageBags.size(); i++)
        {
            for (size_t j = i + 1; j < ImageBags.size(); j++)
            {
                // Feature matching
                vector<DMatch> matches;
                _3DBuilder::MatchFeatures(ImageBags[i].Descriptor, ImageBags[j].Descriptor, matches);
                if (matches.size() < 10) { continue; }  // too few matches

                if (i == 0)
                {
                    vector<Point2f> p1, p2;
                    Mat R, T;  // rotation matrix and translation vector
                    Mat mask;  // non-zero entries are inliers, zero entries are mismatches
                    _3DBuilder::GetMatchedPoints(ImageBags[i].Key_points, ImageBags[j].Key_points, matches, p1, p2);
                    if (!_3DBuilder::FindTransform(K, p1, p2, R, T, mask)) { continue; }
                    ImageBags[j].R = R;
                    ImageBags[j].T = T;
                    _3DBuilder::MaskoutPoints(p1, mask);
                    _3DBuilder::MaskoutPoints(p2, mask);
                    if (p1.size() == 0) { continue; }

                    vector<Point3f> xyz;
                    Mat R0 = Mat::eye(3, 3, CV_64FC1);
                    Mat T0 = Mat::zeros(3, 1, CV_64FC1);
                    _3DBuilder::Reconstruct(K, R0, T0, R, T, p1, p2, xyz);

                    // Use the mask to link each inlier keypoint to its point in the cloud
                    int idx = 0;
                    for (size_t r = 0; r < matches.size(); r++)
                    {
                        if (mask.at<uchar>((int)r) > 0)
                        {
                            ImageBags[i].Correspond_struct_idx[matches[r].queryIdx] = (int)All_structure.size() + idx;
                            ImageBags[j].Correspond_struct_idx[matches[r].trainIdx] = (int)All_structure.size() + idx;
                            idx++;
                        }
                    }
                    // Append xyz to All_structure
                    for (size_t s = 0; s < xyz.size(); s++) { All_structure.push_back(xyz[s]); }
                }
                else
                {
                    // Not image 0: use PnP, matching pixels to known world points.
                    // Image i must already have a pose, otherwise triangulation below is impossible
                    if (ImageBags[i].R.empty() || ImageBags[i].T.empty()) { continue; }

                    // First collect the matched pixel coordinates
                    vector<Point2f> p1, p2;
                    Mat R, T;  // rotation matrix and translation vector
                    _3DBuilder::GetMatchedPoints(ImageBags[i].Key_points, ImageBags[j].Key_points, matches, p1, p2);

                    // Then go through all matches and see which already have world coordinates
                    vector<int> pointWorldPositionIndex;
                    vector<int> pointImagePositionIndex;
                    for (size_t k = 0; k < matches.size(); k++)
                    {
                        // Index 0 is a valid point, so the test is >= 0
                        if (ImageBags[i].Correspond_struct_idx[matches[k].queryIdx] >= 0)
                        {
                            pointWorldPositionIndex.push_back(ImageBags[i].Correspond_struct_idx[matches[k].queryIdx]);
                            pointImagePositionIndex.push_back(matches[k].trainIdx);
                        }
                    }
                    if (pointWorldPositionIndex.size() <= 8) { continue; }  // too few correspondences to solve

                    vector<Point3f> object_points;
                    vector<Point2f> image_points;
                    for (size_t k = 0; k < pointWorldPositionIndex.size(); k++)
                    {
                        object_points.push_back(All_structure[pointWorldPositionIndex[k]]);
                        image_points.push_back(ImageBags[j].Key_points[pointImagePositionIndex[k]].pt);
                    }
                    Mat rvec;
                    if (!solvePnPRansac(object_points, image_points, K, noArray(), rvec, T)) { continue; }
                    // Convert the rotation vector to a rotation matrix
                    Rodrigues(rvec, R);

                    vector<Point3f> next_structure;
                    _3DBuilder::Reconstruct(K, ImageBags[i].R, ImageBags[i].T, R, T, p1, p2, next_structure);
                    ImageBags[j].R = R;
                    ImageBags[j].T = T;

                    // Link every match to its new point in the cloud.
                    // No mask is used here, so existing indices are overwritten
                    int idx = 0;
                    for (size_t r = 0; r < matches.size(); r++)
                    {
                        ImageBags[i].Correspond_struct_idx[matches[r].queryIdx] = (int)All_structure.size() + idx;
                        ImageBags[j].Correspond_struct_idx[matches[r].trainIdx] = (int)All_structure.size() + idx;
                        idx++;
                    }
                    for (size_t k = 0; k < next_structure.size(); k++) { All_structure.push_back(next_structure[k]); }
                }
            }
        }

        /*ofstream outfile;
        outfile.open(".XYZ.txt", ios::binary | ios::app | ios::in | ios::out);*/
        // Write one "x y z" line per point
        for (size_t k = 0; k < All_structure.size(); k++)
        {
            outfile << All_structure[k].x << " " << All_structure[k].y << " " << All_structure[k].z;
            outfile << "\n";
        }
        outfile.close();
        return 0;
    }

The main function. The camera intrinsics are hard-coded in the program.
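To make the role of the hard-coded intrinsics concrete, the sketch below projects a point given in the camera frame onto the image with the pinhole model. The numeric values are copied from the `K` in the listing; `Intrinsics`, `Pixel`, `Project` and `kPostIntrinsics` are hypothetical names used only here, and lens distortion is ignored (the listing also passes no distortion coefficients).

```cpp
#include <cassert>

// The four free parameters of the 3x3 intrinsic matrix K.
struct Intrinsics {
    double fx, fy;  // focal lengths in pixels
    double cx, cy;  // principal point in pixels
};

struct Pixel {
    double u, v;
};

// Pinhole projection of a camera-frame point (x, y, z), z > 0:
// u = fx * x / z + cx, v = fy * y / z + cy.
Pixel Project(const Intrinsics& k, double x, double y, double z)
{
    assert(z > 0.0);  // the point must be in front of the camera
    return { k.fx * x / z + k.cx, k.fy * y / z + k.cy };
}

// Values taken from the K matrix in the main function.
const Intrinsics kPostIntrinsics{1520.4, 1525.9, 302.32, 246.87};
```

A point on the optical axis, such as (0, 0, 2), lands exactly on the principal point (302.32, 246.87). If you run the program on your own photos, these four numbers are what you need to replace with your camera's calibration.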

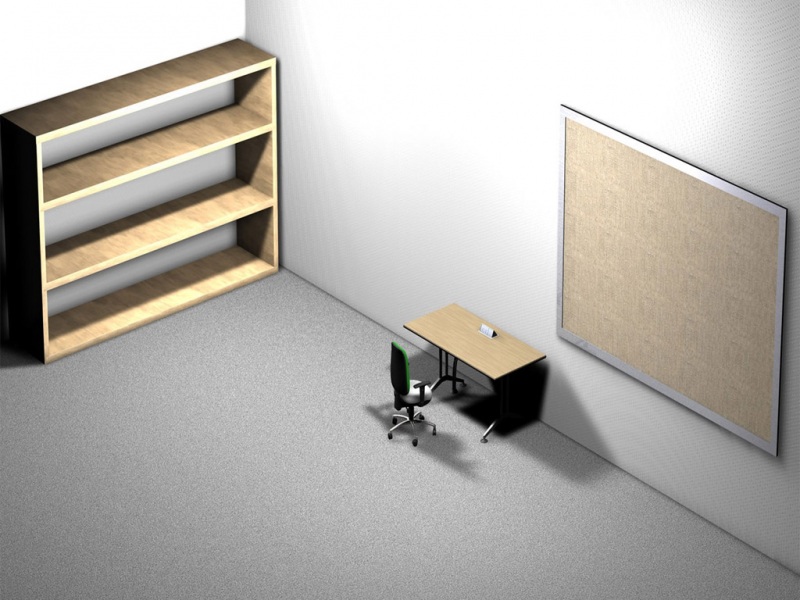

使用的图如下:

The reconstruction result (a Zhihu video embedded in the original post):

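I couldn't get the attachment to upload; I'm not sure whether that's a bug. If you need the original images, send me a private message.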